Mental health technology has emerged as a transformative force in addressing the global mental health crisis, with artificial intelligence-powered applications and digital therapeutics offering unprecedented opportunities to democratize access to psychological support. The rapid expansion of this field, accelerated by the COVID-19 pandemic, has generated both remarkable promise and significant concerns about privacy, efficacy, and regulatory oversight. This comprehensive analysis examines the current landscape of mental health technology, drawing from recent systematic reviews, clinical trials, policy documents, and real-world implementation data to provide an evidence-based assessment of opportunities and risks in this rapidly evolving domain.

Current Landscape of Mental Health Technologies

AI-Powered Interventions and Chatbots

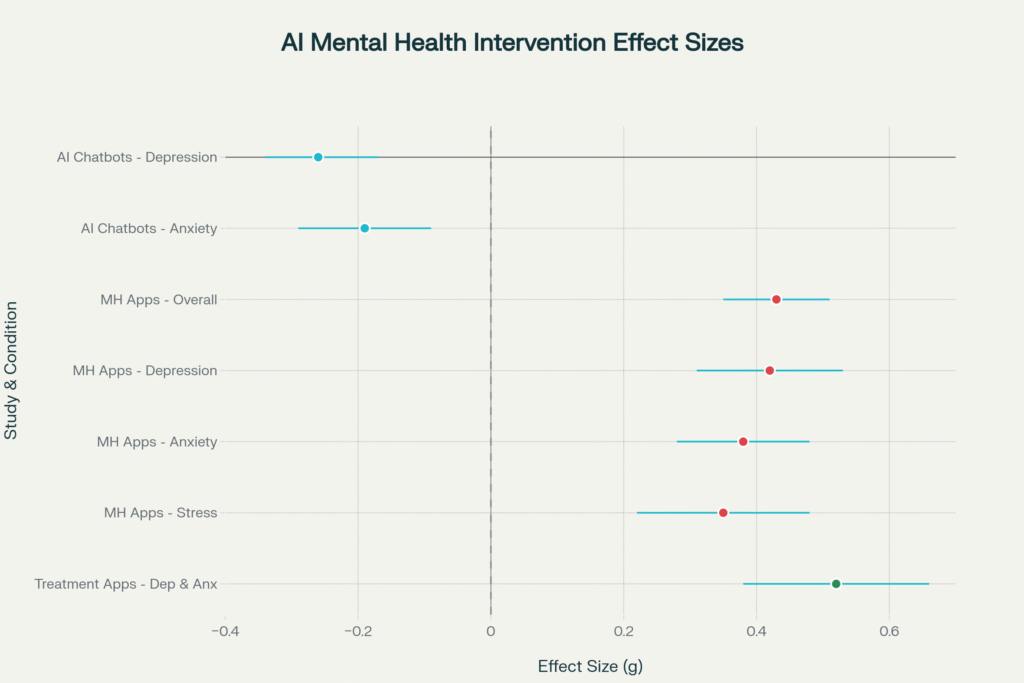

Recent meta-analytic evidence suggests that AI-based chatbot interventions can help with common mental health conditions. A meta-analysis of 18 randomized controlled trials involving 3,477 participants found that AI chatbots produced small but statistically significant improvements in depressive symptoms (effect size g = -0.26, 95% CI -0.34 to -0.17) and anxiety symptoms (effect size g = -0.19, 95% CI -0.29 to -0.09). The largest benefits emerged after 8 weeks of treatment, though effects diminished at three-month follow-up, which raises questions about long-term sustainability.[1]
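To make the reported intervals easier to interpret, the following minimal sketch recovers the standard error implied by each 95% confidence interval and the corresponding z statistic. It assumes the intervals are symmetric, normal-theory intervals around g (the published bounds are rounded, so the results are approximate); the function names are illustrative and not taken from the cited meta-analysis.

```python
from statistics import NormalDist


def se_from_ci(lower: float, upper: float, level: float = 0.95) -> float:
    """Recover the standard error implied by a symmetric normal-theory CI."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    return (upper - lower) / (2 * z_crit)


def z_and_p(estimate: float, se: float) -> tuple:
    """Two-sided z-test of the estimate against zero."""
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Pooled effects as quoted in the text: (g, CI lower bound, CI upper bound)
reported = {
    "depression": (-0.26, -0.34, -0.17),
    "anxiety": (-0.19, -0.29, -0.09),
}

for outcome, (g, lower, upper) in reported.items():
    se = se_from_ci(lower, upper)
    z, p = z_and_p(g, se)
    print(f"{outcome}: g={g:+.2f}, implied SE={se:.3f}, z={z:.2f}, p={p:.2g}")
```

Both intervals exclude zero, which is why the effects count as statistically significant even though their magnitude is small by conventional benchmarks.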

Leading AI-powered platforms have demonstrated clinical efficacy in rigorous trials. Woebot, a text-based conversational agent delivering cognitive behavioral therapy, significantly reduced depression symptoms compared to control groups in multiple randomized controlled trials. Similarly, Wysa, an AI conversational agent incorporating evidence-based CBT techniques, showed substantial mood improvements in real-world evaluations, with highly engaged users experiencing significantly greater symptom reduction than less engaged users. These findings suggest that AI chatbots can serve as effective adjuncts to traditional mental health care, particularly for individuals with mild to moderate symptoms.[2][3][4][5]

Digital Mental Health Applications

A large-scale meta-analysis encompassing 92 randomized controlled trials and 16,728 participants revealed that digital mental health applications significantly improved clinical outcomes compared to control groups, with a medium effect size (g = 0.43). The analysis found significant positive effects for interventions targeting depression, anxiety, stress, and body image concerns, while no significant effects were observed for psychosis, suicide prevention, or postnatal depression interventions.[6]

However, the evidence base reveals concerning methodological limitations. A comprehensive quality assessment of 176 RCTs conducted between 2011 and 2023 found minimal improvements in methodological rigor over time. Fewer than 20% were replication trials, fewer than 35% reported adverse events, and high dropout rates remained problematic. These findings underscore the need for more rigorous evaluation standards in the digital mental health field.[7]

Treatment-Specific Applications

Systematic review evidence indicates that treatment apps targeting specific conditions show particular promise. Studies of six treatment mental health apps found that four demonstrated statistically significant effects, reducing symptoms of acrophobia, depression, and anxiety while improving quality-of-life metrics. Self-monitoring applications showed mixed results, with one study reporting reduced hospitalizations, relapses, and urgent care visits, though other studies found no significant clinical impact.[8]

Evidence Base and Clinical Effectiveness

Robust Clinical Trials

The strongest evidence comes from well-designed randomized controlled trials of specific platforms. Woebot studies consistently demonstrate significant reductions in depression and anxiety symptoms, with participants appreciating both the therapeutic process and psychoeducational content. Cross-sectional analysis of over 36,000 Woebot users revealed working alliance scores comparable to traditional CBT methods, suggesting high acceptability across diverse demographics.[9][3]

Wysa research includes real-world effectiveness studies showing significant mood improvements, particularly among highly engaged users. A clinical trial found Wysa effective in managing chronic pain and associated depression and anxiety, leading to FDA Breakthrough Device Designation. This regulatory recognition represents a significant milestone for AI-powered mental health interventions.[2][10]

Engagement and Retention Challenges

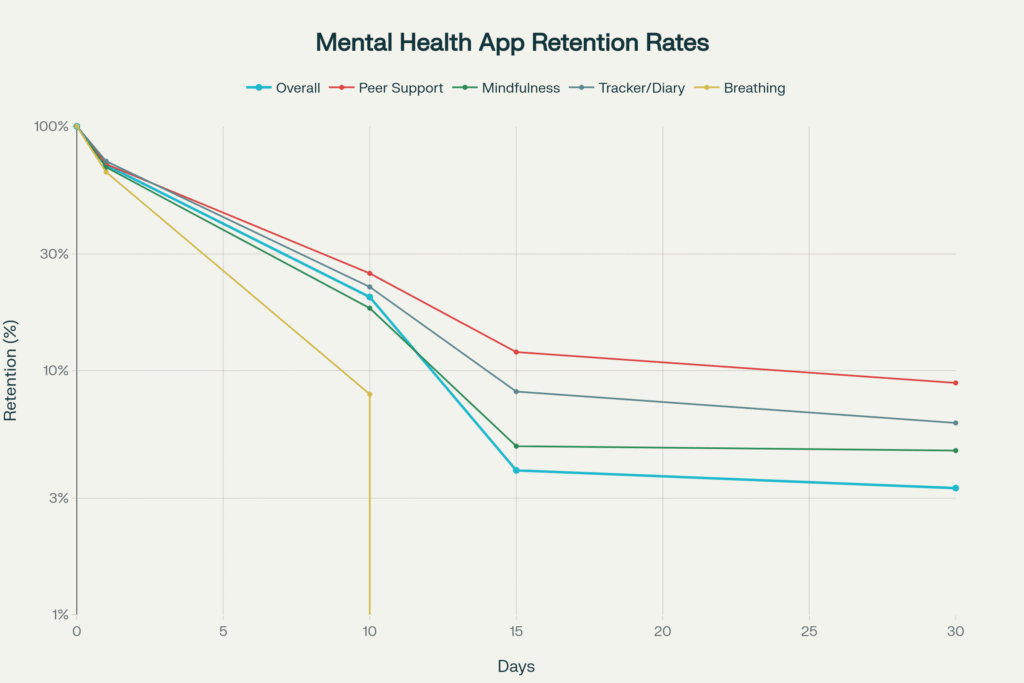

Despite clinical efficacy, user engagement remains a critical limitation. Research reveals dramatic dropout patterns, with median 15-day retention rates of only 3.9% and 30-day retention rates of 3.3% across mental health apps. The most severe decline occurs between day 1 and day 10, with over 80% of users discontinuing use. Different app types show varying retention patterns, with peer support apps achieving higher 30-day retention (8.9%) compared to breathing exercise apps (0.0%).[11]
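As a rough illustration of how such retention figures can be computed, the sketch below takes day-N retention to be the share of installers who opened the app exactly N days after installing. That definition, the data layout, and the sample cohort are assumptions made for this example; the cited study may define retention differently.

```python
from datetime import date, timedelta


def n_day_retention(installs: dict, opens: set, n: int) -> float:
    """Fraction of installers who opened the app exactly n days after install.

    installs maps a user id to an install date; opens is a set of
    (user id, date) pairs, one per day on which the user opened the app.
    """
    if not installs:
        return 0.0
    retained = sum(
        1
        for user, installed in installs.items()
        if (user, installed + timedelta(days=n)) in opens
    )
    return retained / len(installs)


# Hypothetical cohort of four users who all installed on the same day.
start = date(2024, 1, 1)
installs = {user: start for user in ("a", "b", "c", "d")}
opens = {
    ("a", start + timedelta(days=1)),
    ("a", start + timedelta(days=15)),
    ("b", start + timedelta(days=1)),
    ("c", start + timedelta(days=1)),
}

for day in (1, 15, 30):
    print(f"day {day}: {n_day_retention(installs, opens, day):.0%}")
```

At the median 30-day rate of 3.3% quoted above, a cohort of 1,000 installs would leave roughly 33 users still opening the app.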

Factors influencing sustained engagement include personalization, therapeutic alliance, habit formation, and alignment with user goals. Studies emphasizing digital phenotyping and personalized app recommendations demonstrate improved engagement outcomes, suggesting that matching interventions to individual user characteristics may enhance long-term utilization.[12][13]

Privacy and Security Concerns

Widespread Vulnerabilities

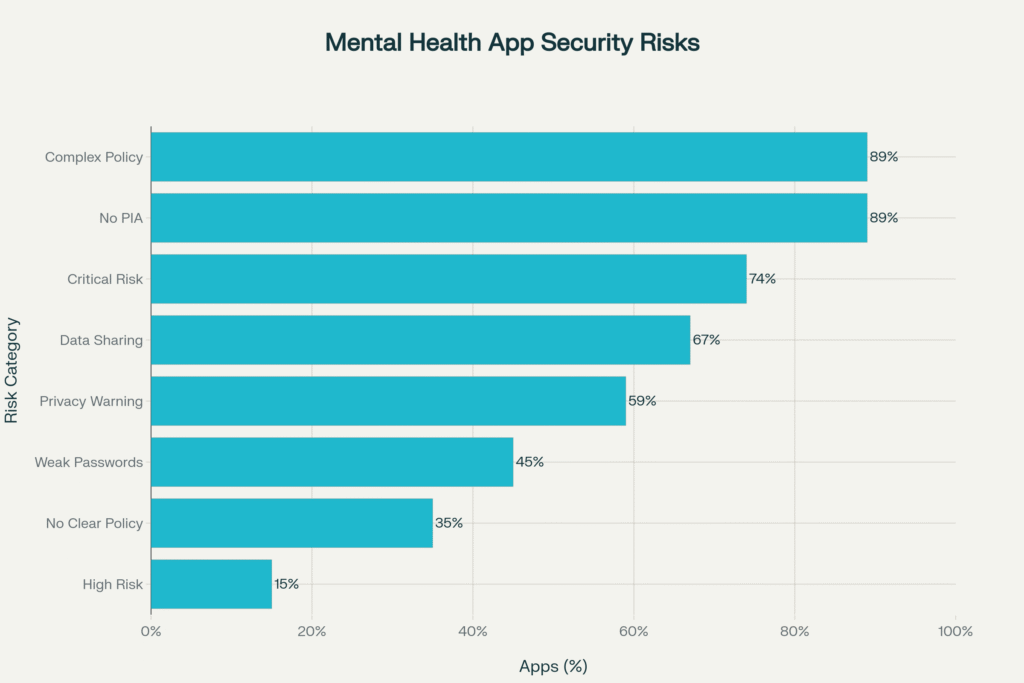

Mental health apps face alarming privacy and security challenges that potentially compromise user safety and trust. Comprehensive security analysis reveals that 74% of mental health apps pose critical security risks, with an additional 15% classified as high risk. These vulnerabilities include weak cryptography, insecure data storage, and inadequate authentication protocols.[14]

Privacy policy analysis demonstrates concerning trends in data protection practices. Mozilla’s 2023 evaluation found that 59% of popular mental health apps received “Privacy Not Included” warning labels, indicating serious privacy concerns. Common issues include sharing sensitive data with third parties for advertising purposes, requiring permissions beyond app functionality, and providing privacy policies that require college-level education to understand.[15][14]

Data Sharing and Third-Party Access

Research consistently identifies unauthorized data sharing as a primary concern among mental health app users. Studies reveal that users fear confidential information being leaked to third parties, potentially affecting professional, personal, and social domains of their lives. The Federal Trade Commission’s $7.8 million settlement with BetterHelp, reached after the company shared sensitive mental health data with advertisers despite confidentiality assurances, exemplifies these concerns.[15][16]

Only 22% of bipolar disorder apps and 29% of suicide prevention apps provide clear privacy policies explaining data usage. This lack of transparency, combined with inadequate consent processes, creates significant risks for vulnerable populations seeking mental health support through digital platforms.[16]

Regulatory Responses

The lack of HIPAA protection for most mental health apps creates a regulatory gap that enables extensive data collection and sharing. Unlike healthcare providers and insurers, independent apps face no comprehensive federal privacy law in the United States regulating their data practices. This regulatory vacuum allows apps to legally share, sell, or use sensitive mental health data unless they voluntarily adopt stricter policies.[17]

Regulatory Frameworks and Global Standards

WHO Digital Health Guidelines

The World Health Organization has established frameworks for artificial intelligence in mental health research, emphasizing the need for ethical standards and comprehensive evaluation methods. The Regional Digital Health Action Plan for the WHO European Region 2023-2030 recognizes the potential of predictive analytics and AI for mental health while acknowledging significant implementation challenges.[18]

WHO guidance emphasizes three key pillars for AI in health: fostering digital frontiers, nurturing AI ecosystems for safety and equity, and advancing Sustainable Development Goals. The organization advocates for responsible AI development, comprehensive stakeholder collaboration, and robust ethical frameworks to mitigate risks while maximizing public health benefits.[19]

UK Regulatory Approach

The UK Medicines and Healthcare products Regulatory Agency (MHRA) has developed comprehensive guidance for digital mental health technologies, clarifying when products qualify as medical devices requiring regulatory oversight. The February 2025 guidance establishes that digital mental health technologies designed to diagnose, prevent, monitor, or treat conditions using complex software must meet medical device standards.[20][21]

The MHRA framework emphasizes three critical areas: defining intended purpose, determining medical device qualification, and establishing risk-based classification systems. Products making medical claims face stricter regulation, while general wellness applications may avoid regulatory requirements. This approach aims to balance innovation with safety while providing clear guidance for developers.[22][23]

Indian Policy Framework

India has implemented comprehensive telemedicine and telepsychiatry guidelines that directly impact digital mental health services. The Telemedicine Practice Guidelines 2020, released by the Ministry of Health and Family Welfare, provide the statutory basis for remote mental health consultations. These guidelines are integrated with existing legislation including the Mental Healthcare Act 2017, Information Technology Act 2000, and various drug regulation frameworks.[24][25]

The Telepsychiatry Operational Guidelines 2020, developed collaboratively by NIMHANS, the Indian Psychiatric Society, and the Telemedicine Society of India, offer practical guidance for implementing telepsychiatry services. These guidelines address critical issues including informed consent, record maintenance, prescription protocols, and integration with traditional care delivery systems.[25][26]

Indian Government Initiatives

TeleMANAS Program

India’s National Tele Mental Health Programme (TeleMANAS) represents a comprehensive government response to the mental health crisis, providing 24/7 free mental health support through a toll-free helpline (14416). Launched on World Mental Health Day 2022, the program operates across all states and union territories, offering services in over 20 languages.[27][28]

TeleMANAS uses a two-tier system: Tier 1 comprises state cells with trained counselors providing immediate support, while Tier 2 includes specialists offering advanced referral services. Since launch, the program has handled over 20 lakh calls, with 36 states and union territories establishing 53 TeleMANAS cells. The October 2024 launch of the TeleMANAS mobile application further expanded accessibility, providing comprehensive support for mental health issues ranging from general well-being to serious mental disorders.[29][30][31]

AIIMS “Never Alone” Initiative

The All India Institute of Medical Sciences Delhi launched the “Never Alone” AI-powered mental health application in September 2025, specifically targeting student mental health and suicide prevention. The web-based platform, accessible via WhatsApp, provides 24/7 screening, intervention, and follow-up services at a cost of only 70 paise per student per day.[32][33][34][35]
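To put the per-day figure in annual terms, the short sketch below converts 70 paise per student per day into a yearly cost. It assumes the rate applies to all 365 calendar days, which the source does not state, and the 5,000-student institution is a hypothetical example. Amounts are kept as integer paise until the final step to avoid floating-point rounding.

```python
PAISE_PER_RUPEE = 100


def annual_cost_rupees(paise_per_student_per_day: int, students: int, days: int = 365) -> float:
    """Yearly cost in rupees, computed in integer paise and converted at the end."""
    total_paise = paise_per_student_per_day * students * days
    return total_paise / PAISE_PER_RUPEE


per_student = annual_cost_rupees(70, students=1)
campus = annual_cost_rupees(70, students=5000)  # hypothetical institution size

print(f"Per student per year: Rs {per_student:,.2f}")
print(f"5,000 students per year: Rs {campus:,.2f}")
```

Under these assumptions the cost works out to Rs 255.50 per student per year.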

The initiative addresses India’s alarming suicide statistics, with over 1.7 lakh deaths by suicide in 2022, and young adults aged 18-30 accounting for 35% of all suicides. The app utilizes the Diagnostic and Statistical Manual of Mental Disorders (DSM) for clinical evaluation and has been implemented at AIIMS Delhi, AIIMS Bhubaneswar, and the Institute of Human Behaviour and Allied Sciences.[36][37][38]

Platform-Specific Evidence

YourDOST: Indian Online Counseling Model

YourDOST is a pioneering Indian online emotional wellness platform that connects users with psychologists, counselors, and life coaches through various consultation modalities. Founded to address mental health stigma and accessibility barriers, the platform reports serving over 600 organizations and reaching more than 30 lakh people, and it claims to have saved more than 10,000 lives.[39][40][41]

The platform operates through institutional partnerships, with organizations subscribing to provide free access to their communities. YourDOST offers services in 20+ Indian languages, ensuring accessibility across diverse populations. The platform’s approach emphasizes anonymous consultations, mood tracking, and comprehensive self-help resources while maintaining strict privacy and confidentiality standards.[40][41]

Wysa: Evidence-Based AI Intervention

Wysa has established a robust clinical evidence base through independent peer-reviewed trials examining effectiveness across depression, anxiety, chronic pain, and maternal mental health. The platform has received FDA Breakthrough Device Designation following clinical trials demonstrating effectiveness comparable to in-person psychological counseling.[10]

Research consistently shows that higher engagement with Wysa correlates with improved mental health outcomes. The platform achieves working alliance scores comparable to traditional CBT methods, with users reporting high therapeutic bond ratings. Real-world evaluations demonstrate significant mood improvements, particularly among users with chronic conditions and maternal mental health challenges.[2][4][42][43]

Woebot: Rigorous Clinical Validation

Woebot maintains the most extensive peer-reviewed evidence base among AI mental health chatbots, with multiple randomized controlled trials demonstrating efficacy across diverse populations. Studies consistently show significant reductions in depression and anxiety symptoms, with high user engagement and satisfaction ratings.[9][3][5]

The platform’s evidence spans various applications, including substance use behavior modification, postpartum mood management, and stress reduction among healthcare workers. Cross-sectional analysis of over 36,000 users reveals therapeutic alliance scores comparable to traditional therapy modalities, suggesting broad acceptability and effectiveness.[3]

Opportunities in Mental Health Technology

Scalability and Access

Digital mental health technologies offer unprecedented opportunities to address treatment gaps and accessibility barriers. AI-powered interventions can provide immediate, 24/7 support without geographical limitations, potentially reaching millions of individuals who lack access to traditional mental health services. This scalability is particularly crucial in low- and middle-income countries where mental health professional shortages severely limit care availability.[44]

The cost-effectiveness of digital interventions enables population-level implementation at relatively modest financial investment. India’s TeleMANAS program exemplifies this potential, providing comprehensive mental health support to over 20 lakh individuals through a coordinated national framework. Similarly, institutional partnerships like YourDOST’s model demonstrate how digital platforms can provide free mental health services to large communities through organizational subscriptions.[41][27]

Stigma Reduction and Anonymity

Digital platforms offer crucial advantages in reducing mental health stigma by providing anonymous, discreet access to support services. Users can engage with mental health resources without fear of social judgment or discrimination, potentially encouraging earlier intervention and preventing symptom escalation. This anonymity is particularly valuable in cultures where mental health stigma remains pronounced.[44]

Research indicates that users appreciate the discretion that digital mental health tools afford, valuing their ability to seek help without revealing their mental health status to others. The anonymous nature of many platforms enables individuals to explore mental health resources and develop coping strategies before potentially transitioning to traditional care modalities.[45]

Personalization and Adaptive Interventions

Advanced AI capabilities enable highly personalized mental health interventions that adapt to individual user characteristics, preferences, and progress patterns. Digital phenotyping approaches can analyze user behavior patterns to provide tailored recommendations and optimize engagement strategies. Machine learning algorithms can continuously refine intervention strategies based on user responses and outcomes.[44][12]

Just-in-time adaptive interventions represent a particularly promising application, providing immediate support during moments of crisis or elevated distress. These systems can detect changes in user behavior or mood indicators and automatically deliver appropriate interventions, potentially preventing symptom exacerbation or crisis situations.[46]
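The detection step described above can be sketched as a simple rule that compares a short recent window of self-reported mood scores against a longer personal baseline. Everything here is an assumption made for illustration: the 1-10 mood scale, the window sizes, the threshold, and the `offer_coping_exercise` action name are hypothetical, and real systems typically combine many more signals.

```python
from collections import deque
from statistics import mean
from typing import Optional


class MoodMonitor:
    """Toy just-in-time trigger based on a drop from a personal baseline."""

    def __init__(self, baseline_size: int = 14, recent_size: int = 3,
                 drop_threshold: float = 2.0):
        self.baseline_size = baseline_size
        self.recent_size = recent_size
        self.drop_threshold = drop_threshold
        # Keep only the scores needed for one baseline plus one recent window.
        self.history = deque(maxlen=baseline_size + recent_size)

    def record(self, mood_score: float) -> Optional[str]:
        """Store a new score and return an action name if a drop is detected."""
        self.history.append(mood_score)
        if len(self.history) < self.baseline_size + self.recent_size:
            return None  # not enough data to compare windows yet
        scores = list(self.history)
        baseline = mean(scores[: self.baseline_size])
        recent = mean(scores[self.baseline_size:])
        if baseline - recent >= self.drop_threshold:
            return "offer_coping_exercise"  # hypothetical intervention id
        return None


monitor = MoodMonitor()
for score in [7] * 14 + [4, 4, 4]:
    action = monitor.record(score)
print(action)
```

Comparing against each user's own baseline rather than a fixed cutoff is one way to reflect the personalization emphasis in the text; the thresholds would need clinical validation before any real use.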

Integration with Traditional Care

Digital mental health technologies increasingly serve as valuable adjuncts to traditional therapy rather than replacements. Blended care models that combine digital tools with human therapist support show enhanced outcomes compared to either modality alone. These hybrid approaches can extend therapeutic engagement between sessions while providing continuous monitoring and support.[44][46]

Integration opportunities include pre-therapy preparation, homework assignments, progress tracking, and relapse prevention. Digital tools can also facilitate communication between patients and providers, enabling more informed clinical decision-making and personalized treatment planning.

Risks and Challenges

Clinical Safety and Misdiagnosis

Digital mental health technologies present significant clinical risks, particularly regarding diagnostic accuracy and appropriate crisis response. AI systems may provide inaccurate assessments or miss critical warning signs that human clinicians would identify. The risk of misdiagnosis or inappropriate treatment recommendations could potentially worsen user conditions or delay necessary professional intervention.[47]

Crisis management represents a particular challenge, as digital systems may inadequately respond to suicidal ideation or severe psychiatric emergencies. While some platforms include crisis resources and escalation protocols, the effectiveness of these safety measures requires ongoing evaluation and improvement.[47]

Data Privacy and Security Vulnerabilities

The extensive privacy and security vulnerabilities identified in mental health apps pose serious risks to user safety and confidentiality. Unauthorized data sharing, weak encryption, and inadequate access controls create potential for identity theft, discrimination, and emotional harm. The sensitive nature of mental health data amplifies these risks, as disclosure could affect employment, insurance, and social relationships.[14][16]

Long-term data storage practices raise additional concerns, as many apps fail to specify data retention periods or deletion procedures. Users may unknowingly consent to indefinite storage of highly sensitive information, creating ongoing privacy risks that persist beyond app usage.[17]

Regulatory Gaps and Oversight

The current regulatory environment creates significant gaps in oversight and quality assurance for mental health technologies. Most mental health apps operate outside healthcare regulations, lacking requirements for clinical validation, safety monitoring, or adverse event reporting. This regulatory vacuum enables the proliferation of potentially harmful or ineffective interventions without adequate oversight.[17][48]

The absence of standardized evaluation criteria makes it difficult for users, clinicians, and policymakers to assess app quality and safety. Inconsistent regulatory approaches across jurisdictions further complicate global implementation and quality assurance efforts.

Digital Divide and Accessibility

Digital mental health technologies may exacerbate existing healthcare disparities by creating new barriers for vulnerable populations. Individuals lacking smartphone access, digital literacy, or reliable internet connectivity cannot benefit from these interventions, potentially widening treatment gaps rather than closing them.[46]

Socioeconomic factors, age, and cultural background significantly influence digital technology adoption and engagement. Without careful attention to accessibility and cultural adaptation, digital mental health solutions risk serving primarily privileged populations while neglecting those with greatest need.

User Selection Criteria and Practical Guidance

Evidence-Based Selection Framework

Research identifies key criteria that users should consider when selecting mental health applications, prioritizing accessibility, security, and scientific grounding. Young adults consistently rank accessibility as the most important factor, followed by intervention quality, security features, customizability, and usability. Cost considerations, condition-specific support, and feature availability also significantly influence app selection decisions.[13][49]

The American Psychiatric Association’s App Evaluation Framework provides a comprehensive structure for assessing mental health technologies across five key domains: background and accessibility, privacy and security, clinical foundation, therapeutic goal, and engagement style. This framework offers systematic guidance for users, clinicians, and researchers evaluating digital mental health tools.[45][50]

Privacy and Security Assessment

Users should prioritize apps with clear, comprehensive privacy policies that explicitly describe data collection, storage, and sharing practices. Essential security features include strong password protection, data encryption, and user control over data sharing. Users should verify that apps provide transparent information about third-party data sharing and offer options to limit or prevent such sharing.[51]

Critical warning signs include vague privacy policies, requests for unnecessary device permissions, and lack of contact information for privacy-related concerns. Users should avoid apps that require extensive personal information during registration or fail to provide clear explanations of data usage practices.[51]

Clinical Foundation and Evidence

Users should seek applications that demonstrate clinical validation through peer-reviewed research, professional development involvement, or recognition by reputable healthcare organizations. Apps incorporating evidence-based therapeutic approaches such as cognitive behavioral therapy, mindfulness, or dialectical behavior therapy offer stronger clinical foundations than those based solely on general wellness concepts.[49][51]

Warning signs include exaggerated efficacy claims, lack of professional oversight, and absence of research citations or clinical validation. Users should be cautious of apps making unrealistic promises about treatment outcomes or claiming to replace professional mental health care entirely.

Practical Implementation Checklist

A comprehensive user checklist should include: 1) Verification of clinical evidence through peer-reviewed studies or professional endorsements, 2) Review of privacy policies for data protection practices and third-party sharing, 3) Assessment of crisis management features and professional support availability, 4) Evaluation of accessibility features including offline functionality and language options, 5) Consideration of cost structures and subscription requirements, and 6) Testing of user interface and engagement features through trial periods when available.[51][50]
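The six checklist items can be captured in a small data structure so that unmet criteria are easy to list for a given app. This is only a sketch: the field names are shorthand invented here for the six items above, and the yes/no treatment ignores the nuance a real evaluation would need.

```python
from dataclasses import dataclass, fields


@dataclass
class AppChecklist:
    """One boolean per checklist item, in the order given in the text."""
    clinical_evidence: bool        # 1) peer-reviewed studies or endorsements
    privacy_policy_reviewed: bool  # 2) data protection and third-party sharing
    crisis_support: bool           # 3) crisis features and professional support
    accessibility: bool            # 4) offline functionality, language options
    cost_acceptable: bool          # 5) cost structure and subscriptions
    trial_tested: bool             # 6) interface tested during a trial period

    def unmet(self) -> list:
        """Names of the checklist items that are not satisfied."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical evaluation of a single app.
review = AppChecklist(
    clinical_evidence=True,
    privacy_policy_reviewed=True,
    crisis_support=False,
    accessibility=True,
    cost_acceptable=True,
    trial_tested=False,
)
print(review.unmet())
```

Listing the unmet items, rather than collapsing them into a single score, keeps gaps such as missing crisis support visible instead of letting strong results elsewhere mask them.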

Users should also consider their specific mental health needs, technology comfort level, and preferences for human interaction versus automated support. The selection process should include consultation with mental health professionals when appropriate, particularly for individuals with severe or complex mental health conditions.

Future Directions and Recommendations

Strengthening Regulatory Frameworks

Comprehensive regulatory frameworks must balance innovation with safety while providing clear guidance for developers, users, and healthcare providers. Regulatory agencies should establish specific criteria for mental health technology evaluation, including requirements for clinical validation, safety monitoring, and adverse event reporting. International coordination could help standardize evaluation approaches and facilitate global implementation of effective interventions.[47]

Regulatory frameworks should distinguish between general wellness apps and clinical interventions while providing proportionate oversight based on risk levels. Clear qualification criteria, classification systems, and evidence requirements would help developers navigate regulatory pathways while ensuring user safety and efficacy.[47]

Enhancing Privacy Protection

Strengthening privacy protection requires both regulatory action and industry self-regulation initiatives. Comprehensive privacy legislation specifically addressing health apps could establish minimum standards for data protection, user consent, and third-party sharing. Industry initiatives could include privacy-by-design principles, regular security audits, and transparent reporting of data practices.[48]

Technical improvements should include stronger encryption standards, user-controlled data sharing options, and enhanced authentication protocols. Privacy impact assessments should become standard practice for mental health technology development, with public reporting of privacy practices and security measures.[14]

Improving Clinical Integration

Successful integration of digital mental health technologies into healthcare systems requires comprehensive planning, provider training, and systematic evaluation approaches. Healthcare organizations should develop clear protocols for digital tool selection, implementation, and monitoring while ensuring appropriate clinical supervision and oversight.[46]

Provider education programs should address digital mental health literacy, helping clinicians understand available technologies, their evidence base, and appropriate use cases. Integration efforts should emphasize complementary roles rather than replacement of traditional care, focusing on enhanced patient engagement and improved outcomes.[46]

Addressing Digital Disparities

Reducing digital health disparities requires targeted efforts to ensure equitable access to mental health technologies across diverse populations. This includes developing culturally adapted interventions, providing technology access support, and creating alternative delivery modalities for populations with limited digital access.[46]

Community-based implementation programs could help bridge digital divides by providing technology training, device access, and ongoing support for vulnerable populations. Partnerships between technology developers, healthcare organizations, and community groups could facilitate broader implementation while addressing local needs and preferences.

Conclusion

Mental health technology represents a transformative opportunity to address global mental health challenges through scalable, accessible, and evidence-based interventions. The growing body of clinical evidence demonstrates that AI-powered chatbots and digital mental health applications can produce meaningful improvements in depression, anxiety, and other mental health conditions when properly designed and implemented. Leading platforms like Woebot and Wysa have established robust evidence bases through rigorous clinical trials, while innovative initiatives like India’s TeleMANAS program and AIIMS “Never Alone” app demonstrate successful population-level implementation approaches.

However, significant challenges must be addressed to realize the full potential of mental health technology. Privacy and security vulnerabilities affect the majority of available applications, creating serious risks for vulnerable users seeking mental health support. Regulatory gaps enable the proliferation of ineffective or potentially harmful interventions without adequate oversight. User engagement remains problematic, with dramatic dropout rates limiting long-term effectiveness.

The path forward requires coordinated efforts across multiple stakeholders. Regulatory frameworks must evolve to provide appropriate oversight while fostering innovation. Privacy protection standards must be strengthened through both legislative action and industry initiatives. Clinical integration efforts should emphasize complementary roles rather than replacement of traditional care. Digital divide considerations must inform development and implementation strategies to ensure equitable access across diverse populations.

For individual users navigating this complex landscape, careful evaluation of clinical evidence, privacy practices, and safety features remains essential. The evidence-based selection criteria and practical guidance outlined in this analysis provide a foundation for informed decision-making while highlighting the importance of professional consultation when appropriate.

Mental health technology holds tremendous promise for transforming mental healthcare delivery, but realizing this potential requires sustained commitment to rigorous evaluation, ethical development, and equitable implementation. The opportunities are significant, but so are the responsibilities to ensure that these powerful tools serve the best interests of individuals and communities seeking mental health support in an increasingly digital world.

Frequently Asked Questions

What are the main benefits of using AI-powered mental health applications?

AI-powered mental health apps show promise in addressing mental health conditions by making support more accessible. A meta-analysis of 18 randomized controlled trials found that AI chatbots led to improvements in both depressive and anxiety symptoms.

How effective are digital mental health interventions?

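Digital mental health interventions have demonstrated meaningful improvements in depressive and anxiety symptoms. A meta-analysis of 18 randomized controlled trials found small but statistically significant effects for depressive symptoms (g = -0.26) and anxiety symptoms (g = -0.19), while a larger meta-analysis of 92 trials of digital mental health applications reported a medium effect size (g = 0.43).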

What are the primary risks associated with mental health technology?

The rapid expansion of mental health technology has raised significant concerns regarding user privacy, the efficacy of the interventions, and the lack of proper regulatory oversight.

What are some key privacy and data security concerns with mental health apps?

Mental health apps can pose data privacy risks due to insecure implementations and the potential for user profiling. There is a high risk of user data being linked, detected, or identified by third parties, as many apps do not provide foolproof mechanisms against this.

Why is user engagement a challenge for mental health apps?

User engagement and retention are significant challenges for mental health technology. Studies show that many users download these apps but do not engage with them consistently over time.

What is the current regulatory landscape for mental health technologies?

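There is growing scrutiny from governments and regulatory bodies to ensure the safety and effectiveness of digital mental health technologies. Oversight remains uneven: in the United States, most mental health apps fall outside HIPAA, while the UK’s MHRA has issued guidance on when digital mental health technologies qualify as medical devices.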

How are AI chatbots different from traditional mental health support?

AI chatbots are software tools that deliver support based on evidence-based techniques such as cognitive behavioral therapy, and some have been clinically evaluated. They are not licensed medical professionals and may lack the ability to build rapport or understand contextual and interpersonal cues.

Are mental health apps considered medical devices in all regions?

The classification of mental health apps varies by region. In some places, like the UK, certain digital mental health technologies that are designed to diagnose, prevent, or treat conditions are considered medical devices.

What are the potential impacts of AI bias in mental health technology?

AI models can introduce biases and failures that may lead to dangerous consequences. For example, a Stanford study found that AI chatbots showed increased stigma toward conditions such as alcohol dependence and schizophrenia.

How can developers improve the safety and efficacy of mental health apps?

Developers should focus on improved algorithm design, regular data audits, and integrating contextual factors. Human oversight is also crucial, and AI chatbots should be used to supplement, rather than replace, human intervention.
